NVIDIA Investor Day Presentation Deck

Training Compute (petaFLOPS)

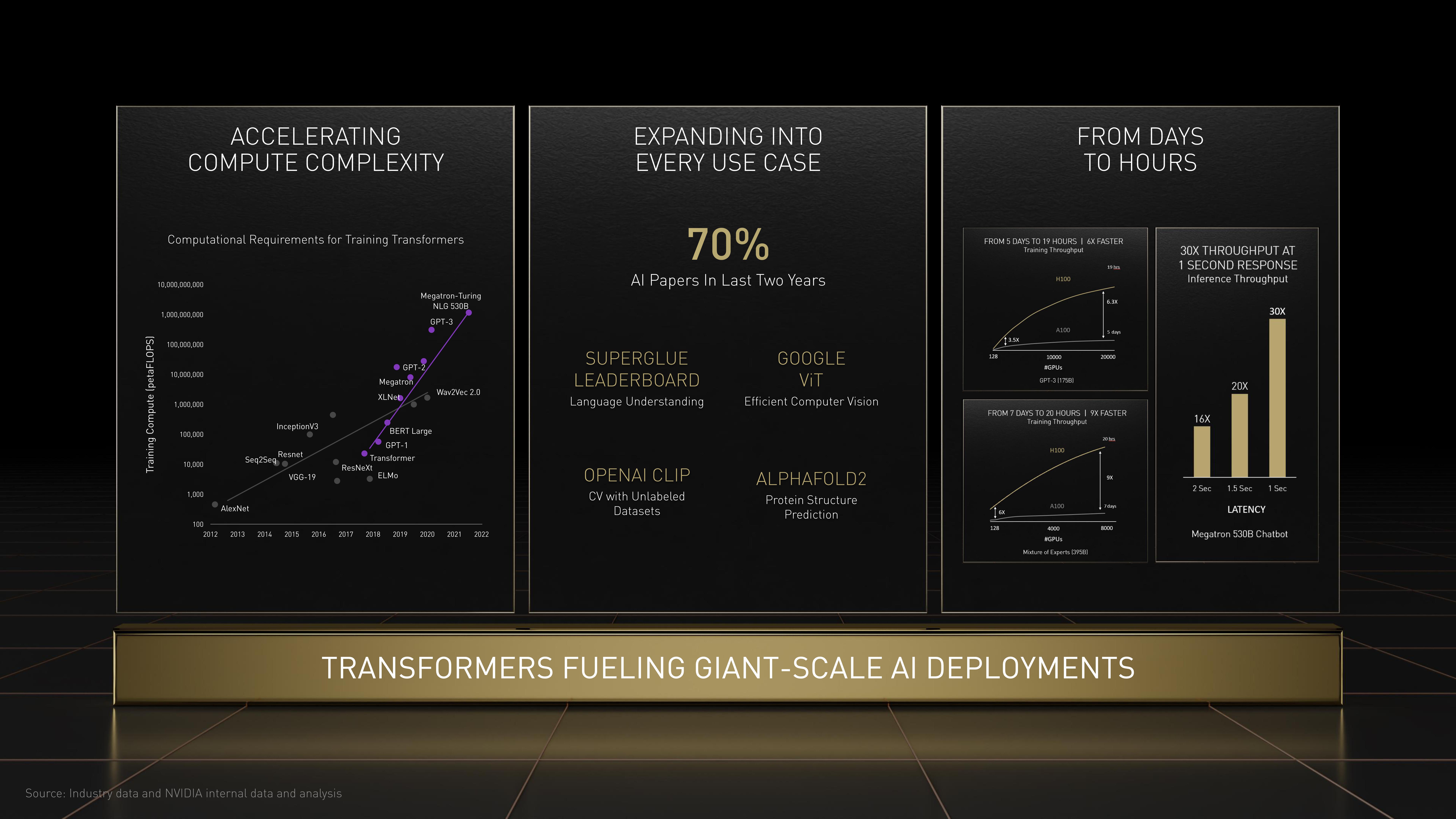

COMPUTE COMPLEXITY

Computational Requirements for Training Transformers

10,000,000,000

1,000,000,000

100,000,000

10.000.000

1,000,000

100,000

ACCELERATING

10,000

1,000

InceptionV3

AlexNet

Resnet

Seq2Seq

VGG-19

100

2012 2013 2014 2015 2016

ResNeXt

GPT-2

Megatron

XLNet

Source: Industry data and NVIDIA internal data and analysis

Megatron-Turing

NLG 530B

GPT-3

• Transformer

ELMO

BERT Large

GPT-1

Wav2Vec 2.0

2017 2018 2019 2020 2021 2022

EXPANDING INTO EVERY USE CASE

70% AI Papers In Last Two Years

SUPERGLUE LEADERBOARD: Language Understanding

OPENAI CLIP: CV with Unlabeled Datasets

GOOGLE VIT: Efficient Computer Vision

ALPHAFOLD2: Protein Structure Prediction

FROM DAYS TO HOURS

GPT-3 (175B): FROM 5 DAYS TO 19 HOURS | 6X FASTER

Mixture of Experts (395B): FROM 7 DAYS TO 20 HOURS | 9X FASTER

[Charts: Training Throughput versus #GPUs for each model, H100 compared with A100. Labels recovered from the two panels: 128, 8000, 10000, 20000; 3.5X, 6X, 6.3X; 5 days, 19 hrs, 7 days, 20 hrs.]

TRANSFORMERS FUELING GIANT-SCALE AI DEPLOYMENTS

30X THROUGHPUT AT 1 SECOND RESPONSE

[Chart: Inference Throughput by LATENCY. 16X at 2 Sec, 20X at 1.5 Sec, 30X at 1 Sec.]

Megatron 530B Chatbot