Experian ESG Presentation Deck

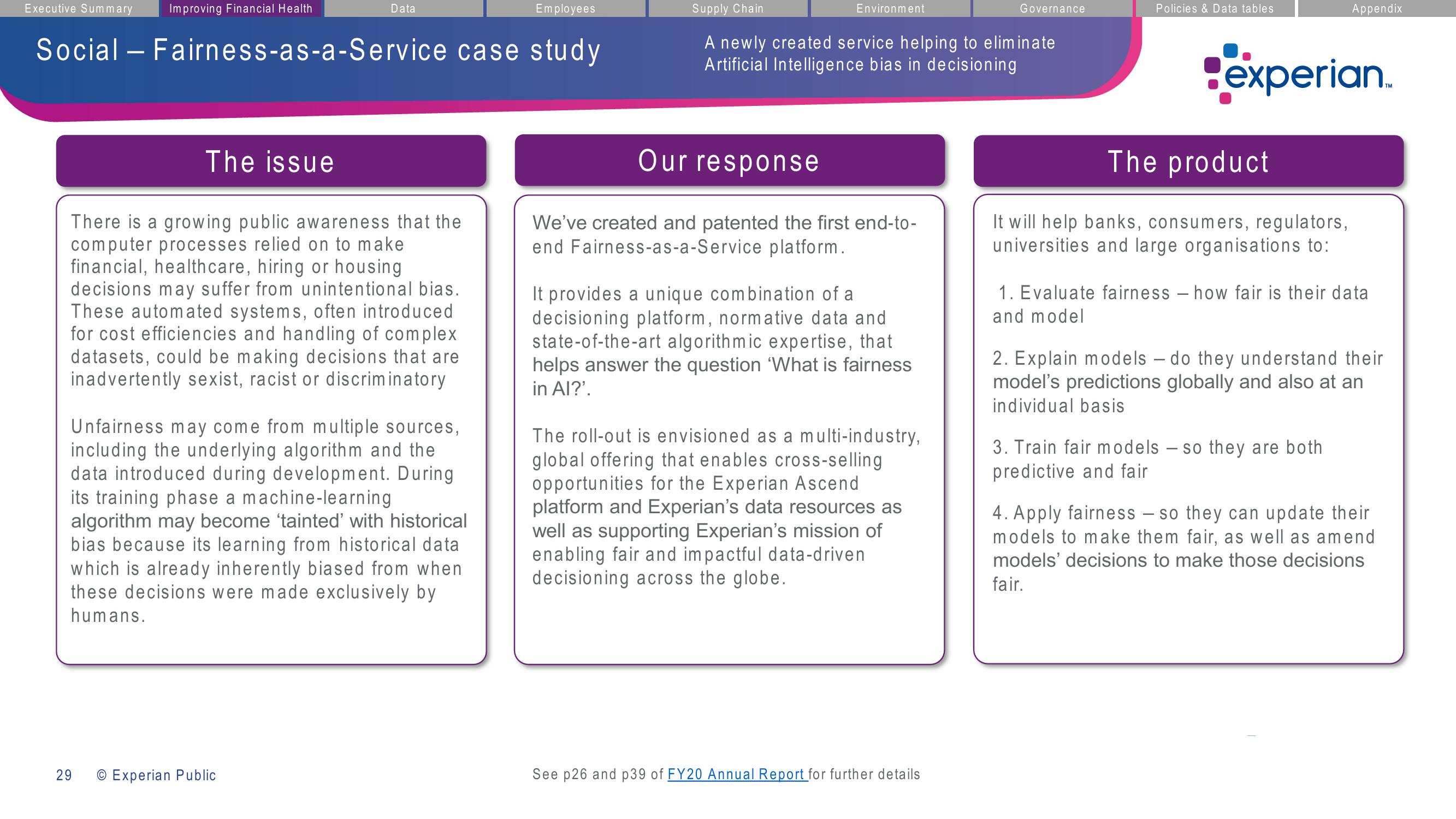

Social - Fairness-as-a-Service case study

The issue

There is a growing public awareness that the computer processes relied on to make financial, healthcare, hiring or housing decisions may suffer from unintentional bias. These automated systems, often introduced for cost efficiencies and handling of complex datasets, could be making decisions that are inadvertently sexist, racist or discriminatory.

Unfairness may come from multiple sources, including the underlying algorithm and the data introduced during development. During its training phase, a machine-learning algorithm may become 'tainted' with historical bias because it is learning from historical data that already carries the bias of a time when these decisions were made exclusively by humans.

© Experian Public

A newly created service helping to eliminate Artificial Intelligence bias in decisioning

Our response

We've created and patented the first end-to-end Fairness-as-a-Service platform.

It provides a unique combination of a decisioning platform, normative data and state-of-the-art algorithmic expertise that helps answer the question 'What is fairness in AI?'.

The roll-out is envisioned as a multi-industry, global offering that enables cross-selling opportunities for the Experian Ascend platform and Experian's data resources, as well as supporting Experian's mission of enabling fair and impactful data-driven decisioning across the globe.

See p26 and p39 of FY20 Annual Report for further details

The product

It will help banks, consumers, regulators, universities and large organisations to:

1. Evaluate fairness - how fair their data and model are

2. Explain models - do they understand their model's predictions globally and also on an individual basis

3. Train fair models - so they are both predictive and fair

4. Apply fairness - so they can update their models to make them fair, as well as amend models' decisions to make those decisions fair.
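
The deck does not say how the platform measures fairness. As a rough illustration of what "evaluate fairness" can mean in practice, the sketch below computes one widely used metric, the demographic parity difference: the gap between the highest and lowest approval rates across groups. The function names, the binary approve/decline encoding and the toy data are all assumptions made for this example, not part of Experian's product.

```python
from collections import defaultdict


def approval_rates(decisions, groups):
    """Return the share of positive decisions (1 = approved) for each group."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for decision, group in zip(decisions, groups):
        totals[group] += 1
        approved[group] += decision
    return {group: approved[group] / totals[group] for group in totals}


def demographic_parity_difference(decisions, groups):
    """Gap between the highest and lowest group approval rates (0 = parity)."""
    rates = approval_rates(decisions, groups)
    return max(rates.values()) - min(rates.values())


# Hypothetical toy data: eight decisions split across two groups, "a" and "b".
decisions = [1, 1, 1, 0, 1, 0, 0, 0]
groups = ["a", "a", "a", "a", "b", "b", "b", "b"]

rates = approval_rates(decisions, groups)
gap = demographic_parity_difference(decisions, groups)
print(rates, gap)
```

A gap near zero means the groups are approved at similar rates. A large gap is a signal to investigate, not proof of discrimination, and other fairness definitions can disagree with this one.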