NVIDIA Investor Presentation Deck

Y-axis: Training Compute (petaFLOPS), log scale from 10² to 10¹⁰

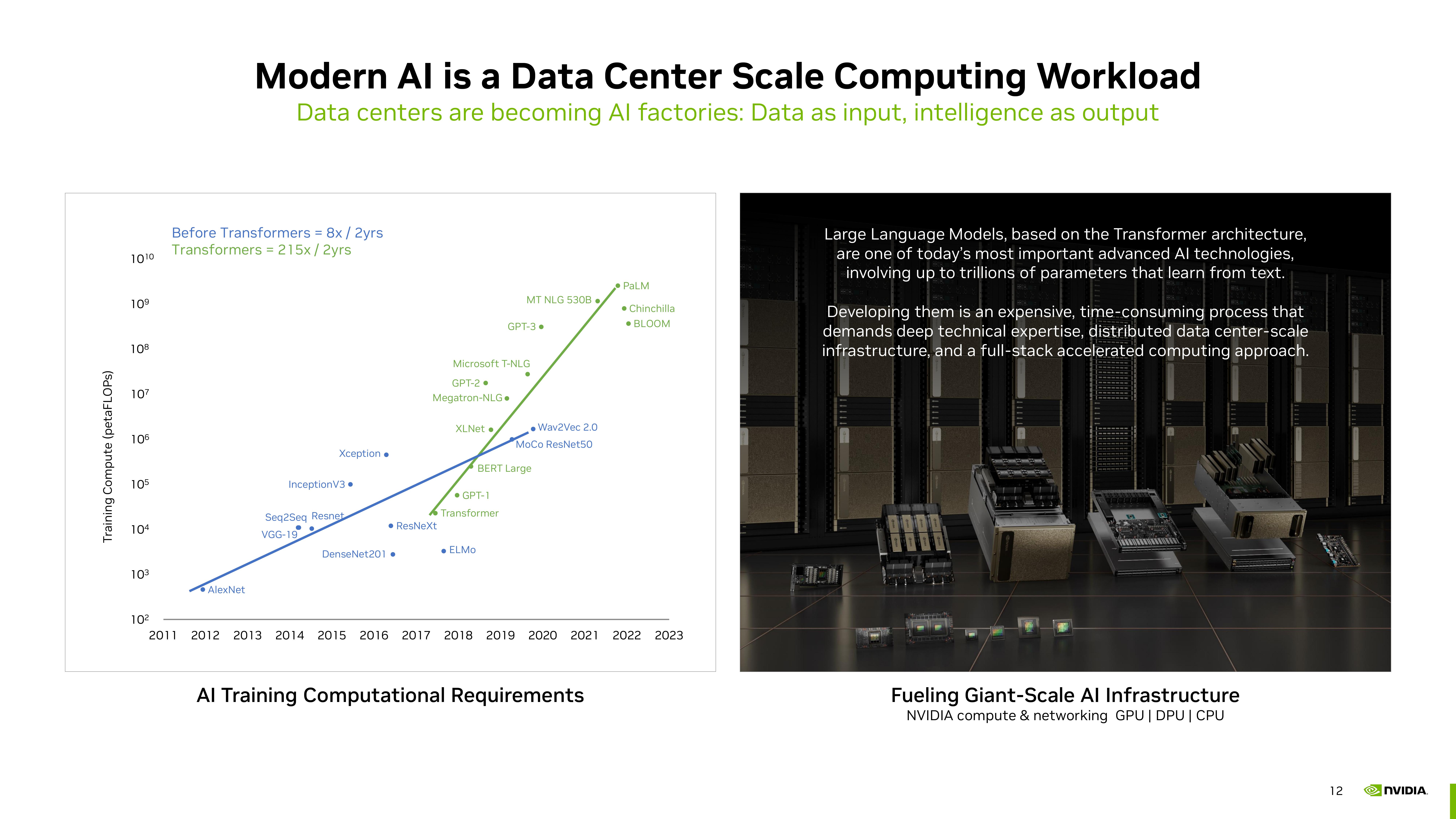

Modern AI is a Data Center Scale Computing Workload

Data centers are becoming AI factories: Data as input, intelligence as output

AI Training Computational Requirements

Training compute growth: Before Transformers = 8x / 2 yrs; Transformers = 215x / 2 yrs

Models plotted: AlexNet, Xception, InceptionV3, Seq2Seq, ResNet, VGG-19, ResNeXt, DenseNet201, Megatron-NLG, Microsoft T-NLG, GPT-2, XLNet, MT NLG 530B, GPT-3, GPT-1, Transformer, ELMo, Wav2Vec 2.0, MoCo ResNet50, BERT Large, PaLM, Chinchilla, BLOOM

X-axis: 2011 to 2023
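The two growth rates annotated on the chart (8x per 2 years before Transformers, 215x per 2 years with Transformers) can be easier to compare as an annual factor and a doubling time. The sketch below is illustrative arithmetic only. It assumes steady exponential growth within each era, and the helper name `annualized` is hypothetical, not something taken from the presentation.

```python
import math


def annualized(factor, years=2.0):
    """Convert 'factor-x growth over `years`' into
    (growth factor per year, doubling time in months),
    assuming constant exponential growth over the period."""
    per_year = factor ** (1.0 / years)
    doubling_months = 12.0 * years * math.log(2.0) / math.log(factor)
    return per_year, doubling_months


# The two rates quoted on the slide, both over a 2-year window.
for label, factor in [("Before Transformers", 8.0), ("Transformers", 215.0)]:
    per_year, months = annualized(factor)
    print(f"{label}: {per_year:.1f}x per year, "
          f"doubling every {months:.1f} months")
```

Under that assumption, 8x per 2 years works out to about 2.8x per year (doubling roughly every 8 months), while 215x per 2 years is about 14.7x per year (doubling roughly every 3 months).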

Large Language Models, based on the Transformer architecture, are one of today's most important advanced AI technologies, involving up to trillions of parameters that learn from text. Developing them is an expensive, time-consuming process that demands deep technical expertise, distributed data center-scale infrastructure, and a full-stack accelerated computing approach.

Fueling Giant-Scale AI Infrastructure

NVIDIA compute & networking: GPU | DPU | CPU
